Distributed Simulation

Motivation

Unreal Engine (UE)’s multiplayer capabilities are used in networked games such as Fortnite, which has over 350 million players and allows up to 100 players to play against each other.

By combining UE’s multiplayer framework, which has a proven track record in games, with rclUE, we can build a distributed simulation environment for ROS 2-compatible robots.

A distributed simulator spreads the load of the simulation server across multiple machines. Compared with running the simulation on a single machine, this makes it possible to simulate robots at a large scale, such as an entire warehouse.

Architecture Overview

Client-Server Model

UE multiplayer is based on the client-server model: each player is a client connected to a single server. In this robotics simulator, the robots are placed in the clients.

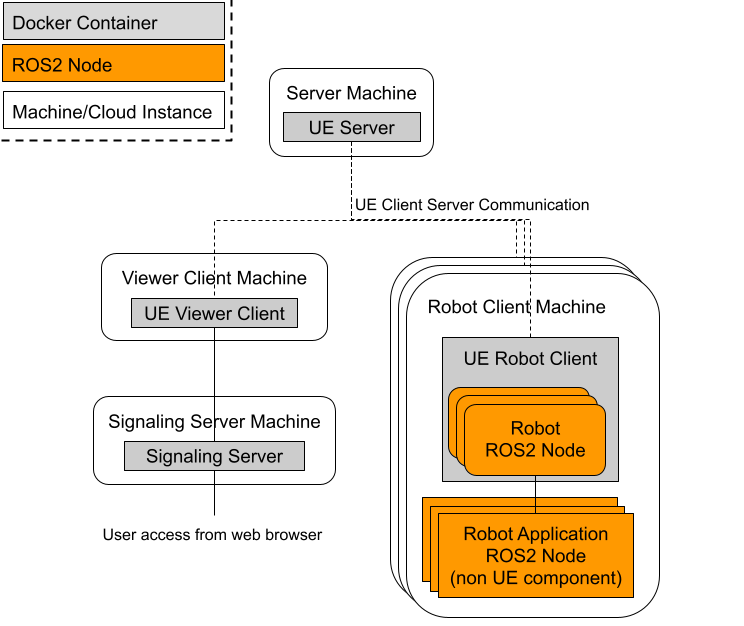

Figure 1: Overview of the configuration.

As seen in Figure 1, the simulator consists of four types of instances.

Server: syncs all state except for the ROS components in the clients.

Robot client: owns multiple simulated robots and the robot application software.

Viewer client: handles rendering on the GPU.

Signaling server: provides the user with a view of the simulation.

This configuration separates the ROS 2 node of a specific robot (Robot ROS 2 Node) and the ROS application software from the simulation server.

In addition, rendering is offloaded from the server to the viewer client, reducing GPU usage on the server and the robot clients. A signaling server runs alongside the viewer client instance; its role is to provide the user with a view of the simulation over the internet.

RPCs and Replication

Communication between the server and the clients uses UE’s replication and RPCs.

Replication is the process of syncing data from the server to the clients. Changes to Actors and properties marked as “replicated” on the server are synced to the clients. Replication is one-way, from server to client, and is used to sync data such as an Actor’s pose and other properties.
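To make the one-way nature of replication concrete, here is a minimal Python model, not UE code: `Actor`, `Server`, `Client`, `replicate` and the `replicated` set are hypothetical stand-ins for UE’s replicated properties. Only properties marked as replicated are pushed, and a change made locally on a client is overwritten on the next replication pass instead of being sent back to the server.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set


@dataclass
class Actor:
    """Hypothetical stand-in for a UE Actor: properties plus the names marked "replicated"."""
    name: str
    properties: Dict[str, Any]
    replicated: Set[str] = field(default_factory=set)


class Client:
    def __init__(self) -> None:
        # Local view of the world: actor name -> last replicated properties.
        self.world: Dict[str, Dict[str, Any]] = {}

    def receive(self, actor_name: str, props: Dict[str, Any]) -> None:
        self.world.setdefault(actor_name, {}).update(props)


class Server:
    def __init__(self) -> None:
        self.actors: Dict[str, Actor] = {}
        self.clients: List[Client] = []

    def replicate(self) -> None:
        """Push replicated properties to every client; nothing flows back."""
        for actor in self.actors.values():
            payload = {k: v for k, v in actor.properties.items() if k in actor.replicated}
            for client in self.clients:
                client.receive(actor.name, dict(payload))


if __name__ == "__main__":
    server = Server()
    client = Client()
    server.clients.append(client)
    server.actors["tb1"] = Actor("tb1", {"pose": (1.0, 2.0, 0.0), "debug": "x"}, {"pose"})
    server.replicate()
    print(client.world)  # {'tb1': {'pose': (1.0, 2.0, 0.0)}}
```

The non-replicated `debug` property never reaches the client, which mirrors why ROS 2 nodes that are not replicated exist only where they are created.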

RPCs (Remote Procedure Calls) are a way to call a function on a client from the server, or the other way around. While replication syncs data from the server to the clients, RPCs let clients change an Actor’s state on the server. RPC functions are valid only when they are defined in the NetworkPlayerController or in a Pawn owned by the NetworkPlayerController, and only replicated Actors can call them.
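The ownership rule for client-to-server RPCs can be sketched as a simple check. This is a hypothetical model, assuming each pawn records its owning controller and each controller carries a PlayerId; `is_client_rpc_allowed` is an illustrative helper, not a UE API.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class PlayerController:
    player_id: int


@dataclass
class Pawn:
    name: str
    owner: Optional[PlayerController] = None  # None: no controller owns this pawn


def is_client_rpc_allowed(caller_id: int, target: Union[PlayerController, Pawn]) -> bool:
    """Accept a client->server RPC only on the caller's own controller
    or on a pawn owned by that controller."""
    if isinstance(target, PlayerController):
        owner: Optional[PlayerController] = target
    else:
        owner = target.owner
    return owner is not None and owner.player_id == caller_id


if __name__ == "__main__":
    pc1 = PlayerController(player_id=1)
    robot = Pawn("tb11", owner=pc1)
    print(is_client_rpc_allowed(1, robot))  # True
    print(is_client_rpc_allowed(2, robot))  # False
```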

In this distributed simulation setup:

ROS 2 nodes are spawned only in the clients, i.e. they are not replicated.

Some ROS 2 components are spawned with the robot on the server and replicated to the clients, but they are initialized (e.g. their publishers/subscribers are started) only in the client that sent the spawn request.

Robot movement happens in the client first, and the client uses an RPC via the NetworkPlayerController to sync the movement to the server.
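The client-first movement flow might look roughly like the following sketch. It assumes a 2D unicycle model for the robot; `Pose2D`, `MoveRpc` and `client_step` are hypothetical names, and in the real system the payload travels through the NetworkPlayerController RPC rather than being returned from a function.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float


@dataclass
class MoveRpc:
    """Hypothetical payload a client sends to the server after moving locally."""
    robot_name: str
    pose: Pose2D
    linear: float       # commanded linear velocity [m/s]
    angular: float      # commanded angular velocity [rad/s]
    client_time: float  # client timestamp [s]


def client_step(name: str, pose: Pose2D, linear: float, angular: float,
                dt: float, now: float) -> MoveRpc:
    """Move the robot locally first (simple Euler step of a unicycle model),
    then build the RPC payload that syncs the new pose to the server."""
    new_pose = Pose2D(
        x=pose.x + linear * math.cos(pose.yaw) * dt,
        y=pose.y + linear * math.sin(pose.yaw) * dt,
        yaw=pose.yaw + angular * dt,
    )
    return MoveRpc(name, new_pose, linear, angular, now)


if __name__ == "__main__":
    rpc = client_step("tb11", Pose2D(0.0, 0.0, 0.0), 1.0, 0.0, dt=0.5, now=10.0)
    print(rpc.pose)  # Pose2D(x=0.5, y=0.0, yaw=0.0)
```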

Detailed Architecture

Basic flows between client and server

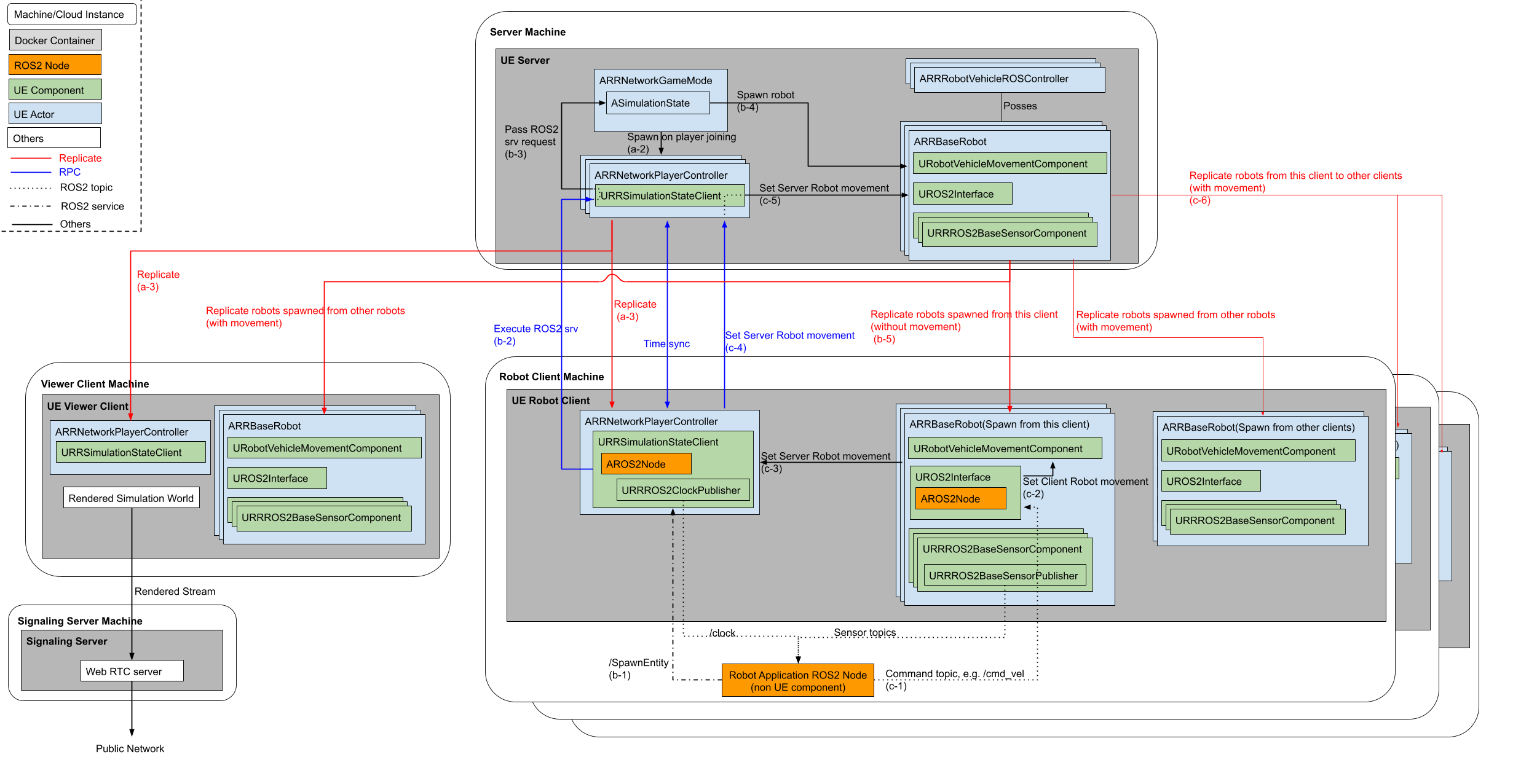

Figure 2: Detailed architecture of distributed simulation

When the NetworkPlayerController passes a request to the server, it sends the robot’s current pose, its velocity command, and the client timestamp at that moment. The server compensates for network delay by comparing the current server timestamp with the client timestamp.
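The text does not spell out the compensation formula. One plausible approach, assumed here, is to extrapolate the reported pose forward by the measured delay (server_time - client_time) using the reported velocity command. This sketch further assumes synchronized clocks and a constant-velocity unicycle model; `compensate_pose` is a hypothetical helper.

```python
import math
from typing import Tuple


def compensate_pose(x: float, y: float, yaw: float,
                    linear: float, angular: float,
                    client_time: float, server_time: float) -> Tuple[float, float, float]:
    """Extrapolate a client-reported pose to server time.

    Assumes client and server clocks are synchronized and that the robot keeps
    its commanded velocity for the whole delay. Negative delays (clock skew)
    are clamped to zero.
    """
    delay = max(0.0, server_time - client_time)
    if abs(angular) < 1e-9:
        # Straight-line motion.
        return (x + linear * math.cos(yaw) * delay,
                y + linear * math.sin(yaw) * delay,
                yaw)
    # Exact integration along a circular arc for constant linear/angular velocity.
    new_yaw = yaw + angular * delay
    r = linear / angular
    return (x + r * (math.sin(new_yaw) - math.sin(yaw)),
            y - r * (math.cos(new_yaw) - math.cos(yaw)),
            new_yaw)


if __name__ == "__main__":
    # A robot driving straight at 1 m/s, reported 200 ms ago.
    print(compensate_pose(0.0, 0.0, 0.0, 1.0, 0.0, client_time=10.0, server_time=10.2))
```

Extrapolation keeps the server-side pose close to where the client already is; a real implementation might instead interpolate or correct gradually to avoid visible jumps.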

Server

The server is responsible for managing the Simulation State and relaying this information to each client. When the simulation begins, the UE GameMode is launched and starts all the simulation components, most importantly the Simulation State. The GameMode also spawns a new Player Controller whenever a client connects.

Each Player Controller serves as the channel for RPCs between the server and its client.

The Simulation State on the server is responsible for spawning robots. It does so in response to requests that external robot software sends to the ROS 2 service attached to the Simulation State Client in each client.

These entities are first created on the server itself, so the server holds the master copy of every entity in the simulation. The server then replicates these entities, along with their relevant data, to every connected client.
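The server-side spawn flow can be modelled as below. This is a hypothetical sketch: `SimulationServer`, `on_client_connected` and `handle_spawn_rpc` are illustrative names, and sequential PlayerIds starting at 0 are an assumption. The server creates the master copy first and then replicates it to every connected client; clients that join later also receive the existing entities.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SpawnedEntity:
    name: str
    requester_id: int  # PlayerId of the client that sent the spawn request


@dataclass
class ClientView:
    player_id: int
    entities: Dict[str, SpawnedEntity] = field(default_factory=dict)


class SimulationServer:
    def __init__(self) -> None:
        self.master: Dict[str, SpawnedEntity] = {}  # authoritative copies
        self.clients: List[ClientView] = []
        self._next_player_id = 0  # assumption: sequential ids from 0

    def on_client_connected(self) -> ClientView:
        """Stand-in for the GameMode spawning a Player Controller per client."""
        view = ClientView(self._next_player_id)
        self._next_player_id += 1
        # A late-joining client still receives every existing entity.
        for name, ent in self.master.items():
            view.entities[name] = SpawnedEntity(ent.name, ent.requester_id)
        self.clients.append(view)
        return view

    def handle_spawn_rpc(self, requester_id: int, name: str) -> None:
        """Create the master copy first, then replicate it to all clients."""
        if name in self.master:
            raise ValueError(f"entity {name!r} already exists")
        self.master[name] = SpawnedEntity(name, requester_id)
        for client in self.clients:
            client.entities[name] = SpawnedEntity(name, requester_id)


if __name__ == "__main__":
    server = SimulationServer()
    viewer = server.on_client_connected()
    robot_client = server.on_client_connected()
    server.handle_spawn_rpc(robot_client.player_id, "tb11")
    print(sorted(viewer.entities))  # ['tb11']
```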

Robot Client

Each client therefore has a replicated copy of its own Player Controller (this copy is shared only between that client and the server; only the server has copies of all the Player Controllers), as well as a copy of every other entity the server is tracking.

The client uses this replicated Player Controller to make RPCs, and uses its unique PlayerId to determine whether a spawn request came from that client.
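On the client side, the PlayerId check decides where ROS components get initialized. A minimal sketch, assuming each replicated robot carries the PlayerId of the client that requested it; `ReplicatedRobot` and `on_robot_replicated` are hypothetical, and the single `ros_initialized` flag stands in for starting the node, publishers and subscribers.

```python
from dataclasses import dataclass


@dataclass
class ReplicatedRobot:
    name: str
    requester_id: int            # PlayerId of the client that requested the spawn
    ros_initialized: bool = False


def on_robot_replicated(robot: ReplicatedRobot, local_player_id: int) -> bool:
    """Every client receives the replicated robot, but only the client whose
    PlayerId matches the requester initializes its ROS components."""
    if robot.requester_id == local_player_id:
        robot.ros_initialized = True
    return robot.ros_initialized


if __name__ == "__main__":
    # Each client holds its own replicated copy of the same robot.
    print(on_robot_replicated(ReplicatedRobot("tb11", requester_id=2), local_player_id=2))  # True
    print(on_robot_replicated(ReplicatedRobot("tb11", requester_id=2), local_player_id=1))  # False
```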

The Robot (a child class of RRBaseRobot) handles client-side actions such as spawning and setting up its components on the client, like its ROS 2 node and any attached sensors that publish through that node.

Because the ROS 2 node is spawned client-side only, there is no copy on the server, and the node serves only the ROS 2 topics relevant to its client and the associated robot. Since robot components such as sensors do not need to be shared with other robots, sensor simulation is also performed client-side, which reduces the computational load on the server.

This structure provides another benefit. Because each client has its own ROS 2 node that is solely responsible for interacting with the robot software, we can more closely emulate both a real robot setup where the robot’s software runs on the robot itself (by running both pieces of software on the same node/container) and a setup where the software interacts with the robot remotely (for example, from the cloud or another remote device).

Viewer Client (PixelStreaming)

Because this setup is intended for a cloud environment, the user needs a way to view the simulation. This is done with Pixel Streaming [5] in a separate client image. Pixel Streaming lets a client stream its rendered output to a user through a signaling server, which acts as the middleman between the user and the Pixel Streaming client and lets the user access and interact with the client through a simple web interface. Since these images are meant to run in the cloud, the web interface lets the user interact with the simulation without setting up a local client. The Pixel Streaming instance uses the most GPU computation of all the instance types, because it must render every frame before sending it to the signaling server, which then forwards it to the user.

Structurally, the cloud deployment has a single server image, a single viewer client, and one robot client image for each robot the user intends to add (see Figure 1). As stated before, the server and robot client images can be deployed on compute nodes that do not necessarily have a GPU, whereas the viewer client requires one.
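The deployment layout above can be summarized as a small planning function. This is a hypothetical sketch: the instance names are made up, and treating the signaling server as GPU-free is an assumption, since the text only says it runs alongside the viewer client.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Instance:
    name: str
    role: str
    needs_gpu: bool


def plan_deployment(robot_names: List[str]) -> List[Instance]:
    """One server, one viewer client with its signaling server,
    and one robot client image per robot. Only the viewer renders."""
    plan = [
        Instance("server", "server", needs_gpu=False),
        Instance("viewer", "viewer_client", needs_gpu=True),
        # Assumption: the signaling server only relays the stream.
        Instance("signaling", "signaling_server", needs_gpu=False),
    ]
    plan += [Instance(f"robot-{n}", "robot_client", needs_gpu=False) for n in robot_names]
    return plan


if __name__ == "__main__":
    for inst in plan_deployment(["tb1", "tb2"]):
        print(inst.name, inst.role, "GPU" if inst.needs_gpu else "CPU-only")
```

Keeping the GPU requirement on a single instance means only one node in the cluster needs a GPU, regardless of how many robot clients are added.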

Example

The turtlebot3-UE repository has an example of distributed simulation. The LargeGround map uses RRNetworkGameMode and is large enough to spawn multiple TurtleBots.

Video 1: Distributed simulation example with TurtleBots

The LiDAR points appear only in a specific client, which shows that the ROS components are spawned/initialized only in that client.

Process to test client server

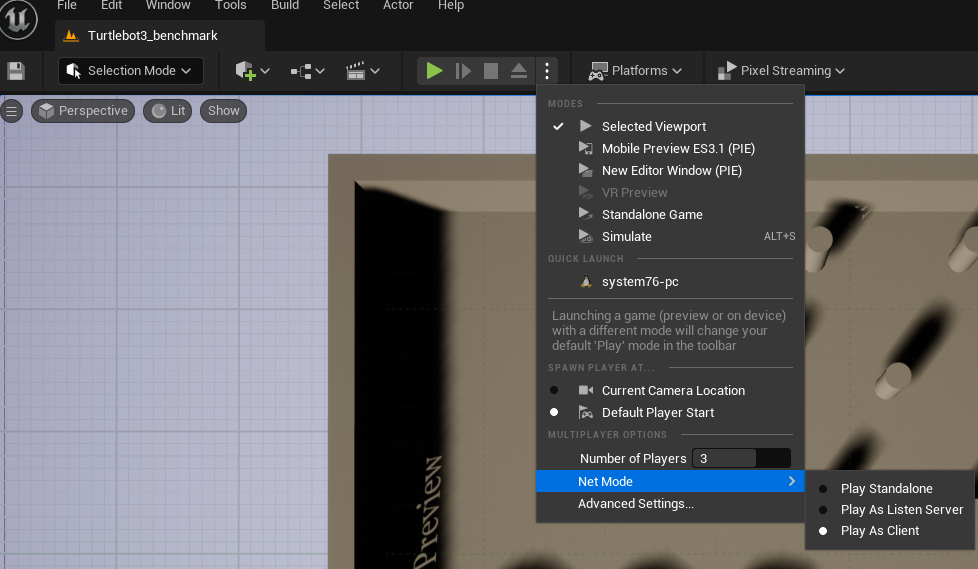

Open the LargeGroundMap.

Make sure the GameMode is RRNetworkGameMode and that, in the Play settings, Net Mode is set to “Play As Client”.

Press Play.

Send a ROS 2 spawn request.

Figure 3: Process to test client server

Note

Editor-specific settings

The Simulation State Client adds the namespace “NetworkPC<n>” to its ROS 2 service to differentiate the services in each client. If you play without the editor, the namespace is not added.

The first client becomes the viewer client; the second and subsequent clients become robot clients.
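These two editor rules can be captured in a pair of helpers. A sketch under stated assumptions: `spawn_entity` is a hypothetical base service name, connections are counted from 1, and because the text does not say how `<n>` relates to the connection order, the helpers keep the two numbers separate.

```python
def client_role(connection_order: int) -> str:
    """In the editor, the first client to connect becomes the viewer client;
    every later client becomes a robot client."""
    if connection_order < 1:
        raise ValueError("connection_order starts at 1")
    return "viewer" if connection_order == 1 else "robot"


def service_name(base: str, n: int, in_editor: bool) -> str:
    """Prefix a ROS 2 service name with the NetworkPC<n> namespace,
    which is only added when playing in the editor."""
    base = base.lstrip("/")
    return f"/NetworkPC{n}/{base}" if in_editor else f"/{base}"


if __name__ == "__main__":
    print(client_role(1), client_role(2))               # viewer robot
    print(service_name("spawn_entity", 1, in_editor=True))   # /NetworkPC1/spawn_entity
    print(service_name("spawn_entity", 1, in_editor=False))  # /spawn_entity
```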

Spawn request from ROS 2

RapyutaSimulationPlugins/ExternalTest/rr_sim_tests has an example client script, test_random_spawn.py, which sends a spawn request with a random initial pose and then sends random cmd_vel commands.

ros2 run rr_sim_tests test_random_spawn test --ros-args -p robot_name:=tb11 -p service_namespace:=NetworkPC1